3.1. Decision Trees

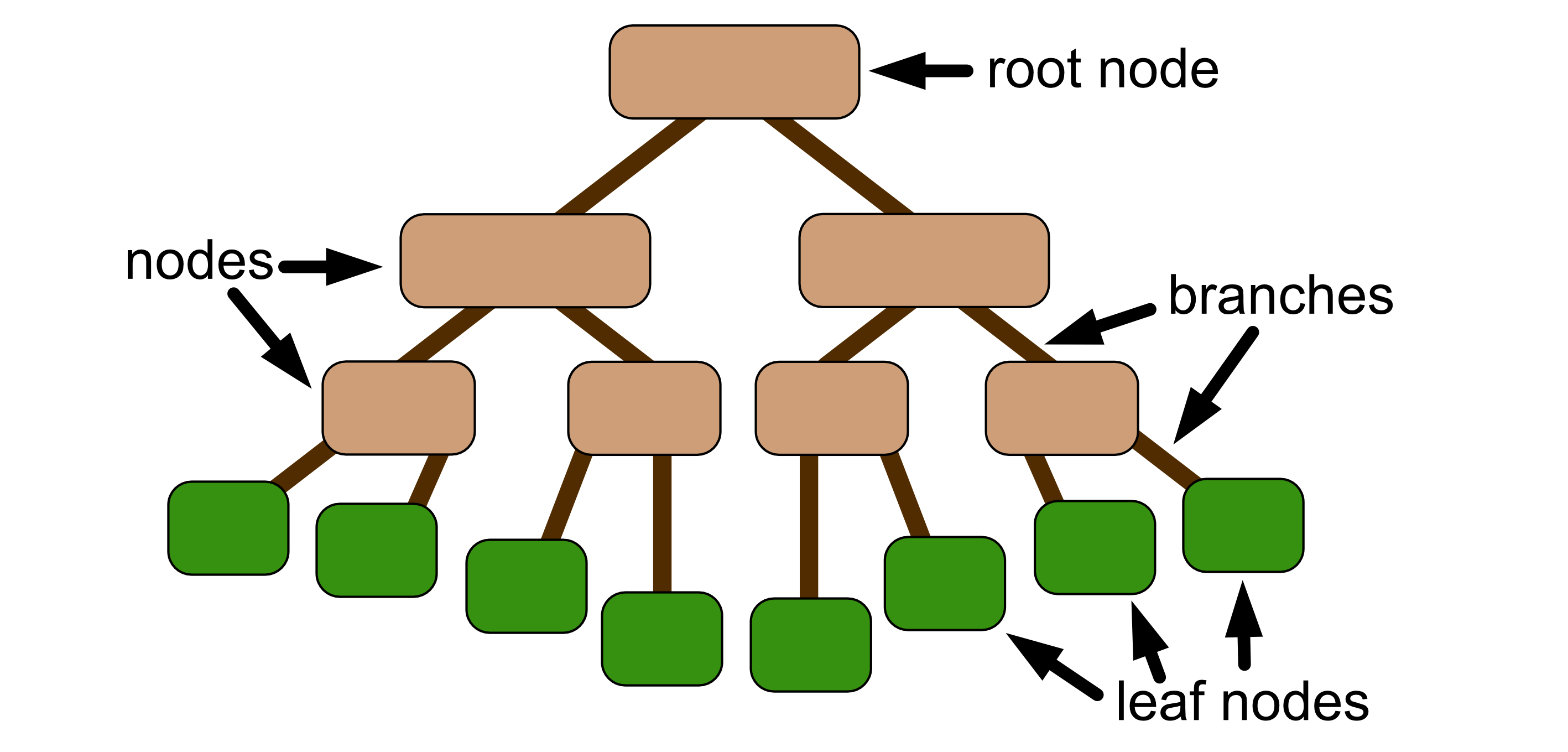

Decision trees are graphical trees that represent a decision-making process. The tree is made up of nodes and branches. Each node represents a decision point and each branch represents the decision that is taken. The node at the top of the tree is called the root node, and this is the starting point of the tree. The nodes at the end (which do not have branches) are called the leaf nodes, and they represent the final outcome or decision. Because the root is at the top and the leaves are at the bottom, a decision tree looks a bit like an upside-down tree.

The decision tree pictured here is called a binary decision tree, since each decision point has exactly two branches. Binary decision trees are probably the most commonly used kind of decision tree.
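The structure described above (a root node, decision nodes with two branches each, and leaf nodes holding outcomes) can be sketched in Python. This is only an illustrative sketch: the `Node` class, the `decide` function, and the feature names `rain_mm` and `temperature_c` are made up for this example and do not come from any library.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Node:
    """One node of a binary decision tree (hypothetical structure for illustration)."""
    feature: Optional[str] = None      # feature tested at a decision node
    threshold: Optional[float] = None  # test is: sample[feature] <= threshold
    left: Optional["Node"] = None      # branch taken when the test is true
    right: Optional["Node"] = None     # branch taken when the test is false
    outcome: Optional[str] = None      # final decision, set only on leaf nodes

    def is_leaf(self) -> bool:
        # A leaf node has no branches
        return self.left is None and self.right is None


def decide(node: Node, sample: dict) -> str:
    """Walk from the root to a leaf, following one branch per decision node."""
    while not node.is_leaf():
        node = node.left if sample[node.feature] <= node.threshold else node.right
    return node.outcome


# Made-up example: decide whether to go for a walk
root = Node(
    feature="rain_mm", threshold=1.0,
    left=Node(feature="temperature_c", threshold=5.0,
              left=Node(outcome="stay in"),
              right=Node(outcome="go for a walk")),
    right=Node(outcome="stay in"),
)

print(decide(root, {"rain_mm": 0.0, "temperature_c": 18.0}))  # go for a walk
```

Here `root` is the root node, the two `Node` objects with a `feature` are decision points, and the three nodes with an `outcome` are the leaf nodes.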

Decision trees are typically a form of supervised learning, meaning that the tree is constructed based on labelled data. Depending on the data that is used you can build:

- A classification tree, which is a decision tree that predicts a category (class label)

- A regression tree, which is a decision tree that predicts a numeric value
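To make the difference concrete, the sketch below builds the smallest possible tree (one decision point, two leaves) from labelled data, once for classification and once for regression. It assumes the common convention that a classification leaf predicts the most frequent class among the training examples that reach it, while a regression leaf predicts their mean. The data, the function names, and the hand-picked threshold are all invented for illustration; a real tree learner would also choose the split itself, which this sketch skips.

```python
from collections import Counter
from statistics import mean


def split(rows, threshold):
    """Partition (x, y) pairs by the test x <= threshold and keep the y values."""
    left = [y for x, y in rows if x <= threshold]
    right = [y for x, y in rows if x > threshold]
    return left, right


def classification_leaf(labels):
    """Predict the most common class among the labels that reach the leaf."""
    return Counter(labels).most_common(1)[0][0]


def regression_leaf(values):
    """Predict the mean of the target values that reach the leaf."""
    return mean(values)


# Made-up labelled data: (hours_studied, target)
class_rows = [(1, "fail"), (2, "fail"), (3, "pass"), (6, "pass"), (7, "pass"), (8, "pass")]
score_rows = [(1, 40.0), (2, 50.0), (3, 60.0), (6, 70.0), (7, 80.0), (8, 90.0)]

THRESHOLD = 3  # chosen by hand for illustration, not learned from the data

left, right = split(class_rows, THRESHOLD)
class_stump = (classification_leaf(left), classification_leaf(right))

left, right = split(score_rows, THRESHOLD)
reg_stump = (regression_leaf(left), regression_leaf(right))

print(class_stump)  # ('fail', 'pass')
print(reg_stump)    # (50.0, 80.0)
```

The tree structure is the same in both cases; only the kind of value stored in the leaves changes.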